Don’t Trust. Verify.

NEAR AI is architected to deliver verifiable, secure AI.

Overview

Each operation is attested in real time, creating cryptographic proof that workloads are protected and unaltered.

We support both open and closed models through a single API, giving you the flexibility to build AI you can trust.

Private. Intelligent. Yours.

Confidential Compute From Edge to Inference

We leverage Trusted Execution Environments (TEEs) at both the CPU and GPU levels, with Intel TDX gateways and NVIDIA Confidential Compute nodes. TEEs are secure areas of a processor that isolate code and data while that code is running.

They keep information encrypted in memory and inaccessible to the host system and the cloud provider. Each operation inside the TEE can be verified through cryptographic attestation, creating proof that your workloads are protected and unaltered.
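The attestation check described above can be illustrated with a minimal sketch. It is not NEAR AI's implementation: the report format, function names, and key below are hypothetical, and HMAC-SHA256 stands in for the asymmetric, vendor-rooted signatures that Intel TDX and NVIDIA GPU attestation actually use. The verifier accepts a report only if its signature is authentic, it echoes the nonce the verifier just sent (freshness), and its workload measurement matches the expected hash (unaltered).

```python
import hashlib
import hmac
import json
import secrets
from dataclasses import dataclass

# HYPOTHETICAL stand-in for a hardware-rooted signing key. Real TEE attestation
# uses asymmetric signatures chained to the hardware vendor's certificates;
# HMAC is used here only so the sketch runs with the standard library.
HYPOTHETICAL_HW_KEY = b"demo-hardware-key"


@dataclass
class AttestationReport:
    measurement: str  # hex SHA-256 of the workload loaded into the TEE
    nonce: str        # verifier-supplied challenge, echoed back by the TEE
    signature: str    # hex MAC over measurement and nonce


def _payload(measurement: str, nonce: str) -> bytes:
    # Canonical encoding so both sides sign and check the same bytes.
    return json.dumps({"measurement": measurement, "nonce": nonce}, sort_keys=True).encode()


def produce_report(workload: bytes, nonce: str) -> AttestationReport:
    """Simulated TEE side: measure the workload and sign it together with the nonce."""
    measurement = hashlib.sha256(workload).hexdigest()
    sig = hmac.new(HYPOTHETICAL_HW_KEY, _payload(measurement, nonce), hashlib.sha256).hexdigest()
    return AttestationReport(measurement, nonce, sig)


def verify_report(report: AttestationReport, expected_measurement: str, nonce: str) -> bool:
    """Client side: accept only an authentic, fresh report for the expected workload."""
    expected_sig = hmac.new(
        HYPOTHETICAL_HW_KEY, _payload(report.measurement, report.nonce), hashlib.sha256
    ).hexdigest()
    return (
        hmac.compare_digest(report.signature, expected_sig)                # authentic
        and hmac.compare_digest(report.nonce, nonce)                       # fresh, not replayed
        and hmac.compare_digest(report.measurement, expected_measurement)  # unaltered workload
    )


if __name__ == "__main__":
    workload = b"inference-server-v1"
    expected = hashlib.sha256(workload).hexdigest()
    nonce = secrets.token_hex(16)
    print(verify_report(produce_report(workload, nonce), expected, nonce))  # True
    print(verify_report(produce_report(b"tampered", nonce), expected, nonce))  # False
```

Generating a new nonce per request is what stops an old but valid report from being replayed. In a real deployment the verifier would validate the vendor's certificate chain rather than share a key with the hardware.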

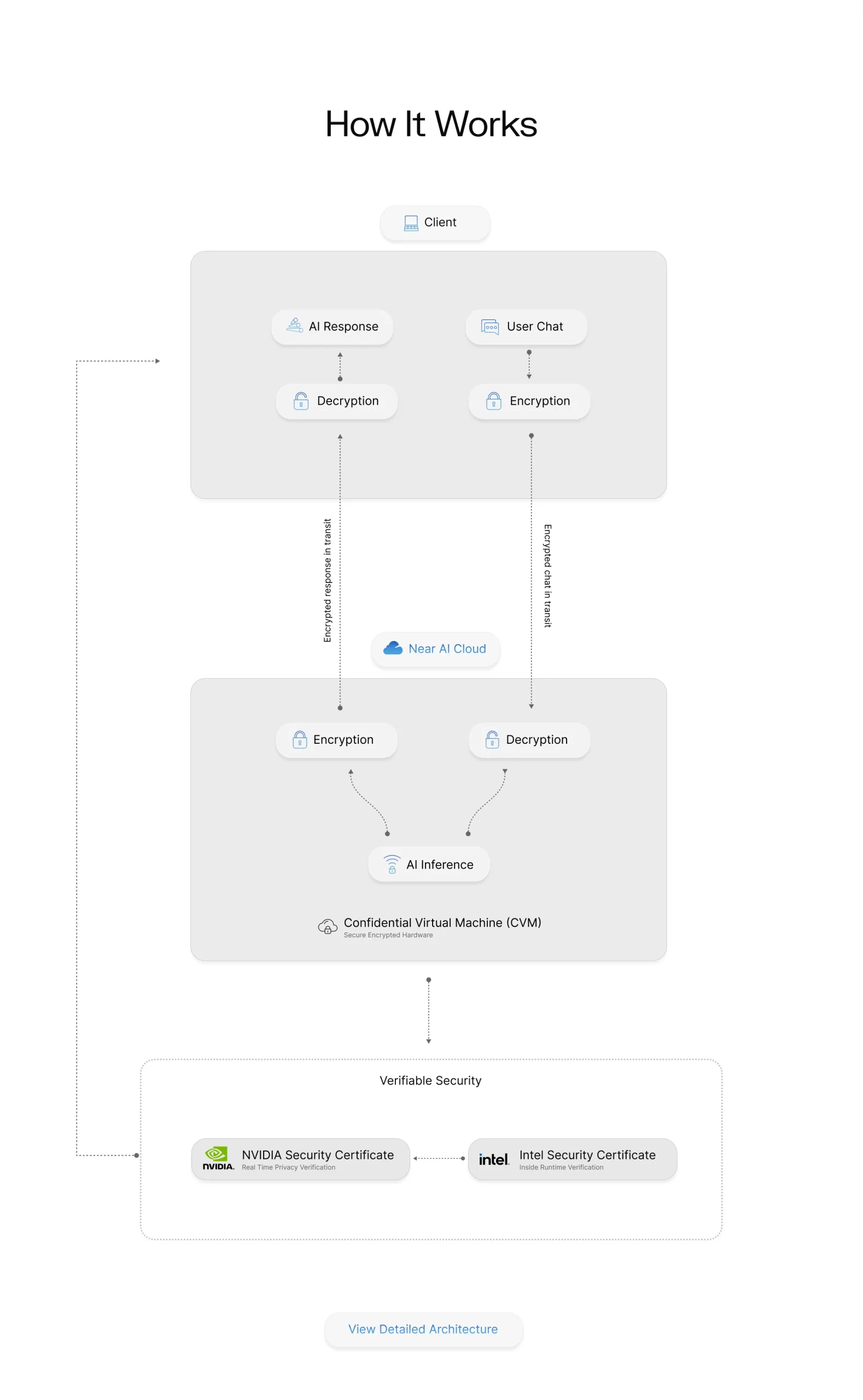

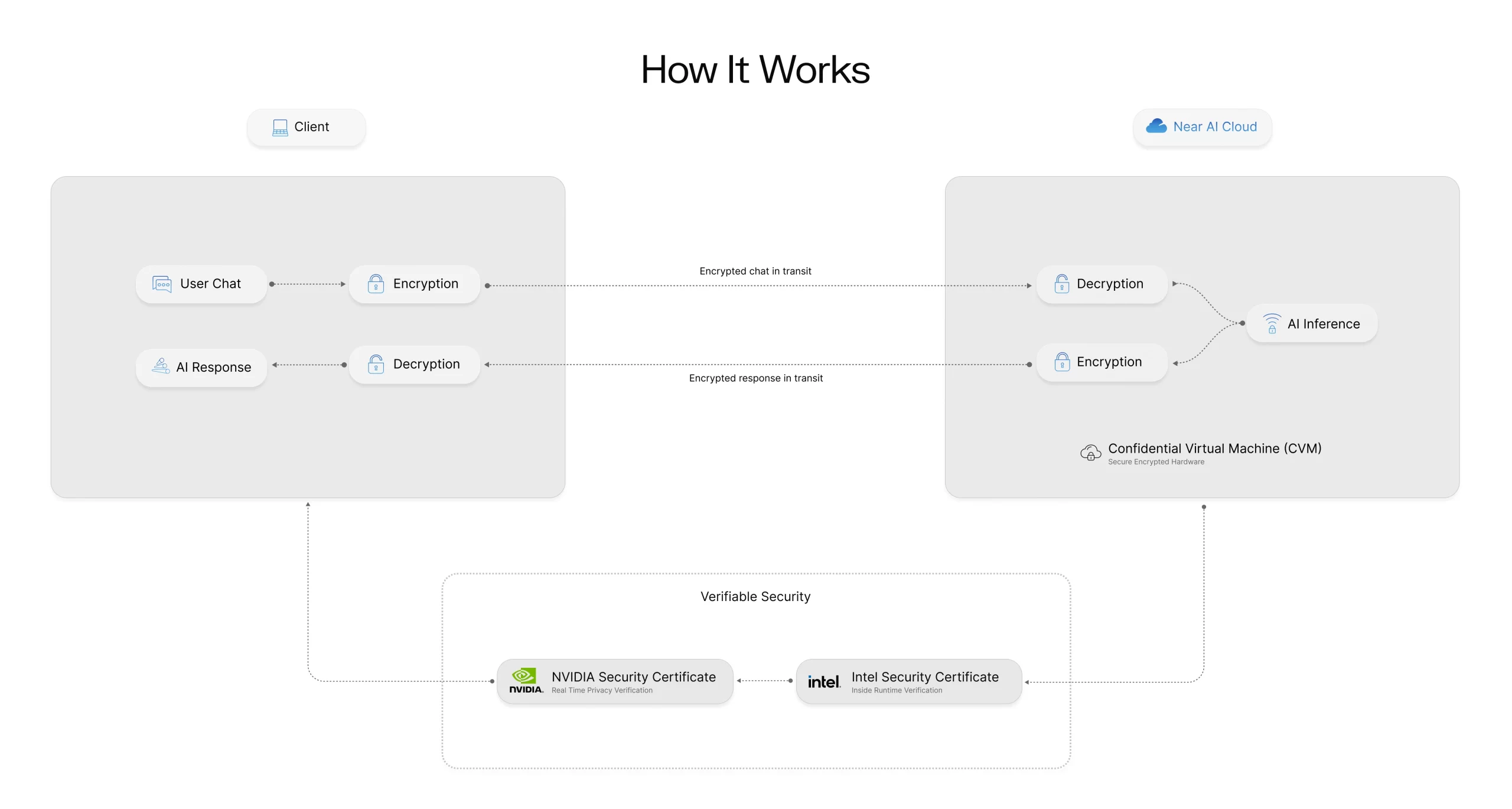

How It Works

[Diagram: Encrypted chat in transit; Encrypted response in transit]

Verifiable Security

Make Sensitive Data Fast, Cheap, and Easy to Use

Go live fast.

Deploy private inference in minutes through a cloud-native API that connects directly to your stack.

Use the best model for every task.

Access open-source, closed-source, or custom models through one simple interface.

Security built into the silicon.

Every inference runs in isolated hardware, keeping data private by design.

Trust that’s proven, not promised.

Each request is verified as it runs, giving you confidence in every result.

Confidential AI without extra cost or complexity.

Built-in isolation and simplified compliance keep operations efficient at scale.